Extract table data with SmolVLM-256M-Instruct

What is SmolVLM?

As its name suggests, SmolVLM is a small Vision Language Model (VLM). Its main strengths are a small memory footprint and fast inference. The Hugging Face model card states:

Its lightweight architecture makes it suitable for on-device applications while maintaining strong performance on multimodal tasks. It can run inference on one image with under 1GB of GPU RAM.

https://huggingface.co/HuggingFaceTB/SmolVLM-256M-Instruct

A lightweight model like this is one of the things we need right now, so I tried it out.

Why is SmolVLM both memory efficient and fast?

SmolVLM's memory efficiency and speed come mainly from its architecture and design.

Image Encoding Efficiency

SmolVLM encodes each 384x384-pixel image patch into just 81 tokens, a much more compact representation of image information than other models use. In Hugging Face's benchmark of the original (2B) SmolVLM, a test prompt plus a single image took only 1.2k tokens, while Qwen2-VL needed 16k. Because memory use grows with the number of tokens, this translates into a large difference in memory consumption.
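
To see where a number like 81 can come from, here is a small worked calculation. It rests on assumptions this article does not state: that the original SmolVLM uses a SigLIP-style vision encoder with 14-pixel patches (so a 384x384 patch yields a 27x27 grid of 729 patch embeddings) and a 3x3 pixel shuffle. The helper name `visual_tokens` is made up for illustration.

```python
def visual_tokens(tile_size: int, patch_size: int, shuffle_ratio: int) -> tuple[int, int]:
    """Return (patch embeddings before shuffle, visual tokens after shuffle) for one square tile."""
    grid = tile_size // patch_size  # patches per side (assumed ViT-style patching)
    if grid % shuffle_ratio:
        raise ValueError("patch grid must be divisible by the shuffle ratio")
    before = grid * grid
    after = (grid // shuffle_ratio) ** 2  # each r x r block becomes one token
    return before, after

# Assumed values for the original SmolVLM: 384x384 tiles, 14-pixel patches, 3x3 pixel shuffle
before, after = visual_tokens(384, 14, 3)
print(before, after, before // after)  # 729 81 9
```

Under these assumptions the 9x reduction from the pixel shuffle is exactly what turns 729 patch embeddings into 81 tokens.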

Compression by pixel shuffling strategy

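SmolVLM compresses image information with a more aggressive pixel shuffle than its base architecture, Idefics3. Pixel shuffle folds each block of neighboring patch embeddings into a single, wider token; SmolVLM reduces the number of visual tokens by a factor of 9 (Idefics3 uses a factor of 4), which significantly reduces the memory footprint.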
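
Pixel shuffle (also called space-to-depth) trades sequence length for embedding width: neighboring patch embeddings are concatenated into one longer vector, so no information is thrown away at this step. Below is a minimal, framework-free sketch of the idea. It is not the actual Idefics3/SmolVLM implementation, which works on tensors and may order the concatenated features differently; in the real models a learned projection then maps each wide vector into the language model's embedding space.

```python
def pixel_shuffle(grid: list[list[list[float]]], r: int) -> list[list[list[float]]]:
    """Space-to-depth: merge each r x r block of neighboring token vectors into one vector.

    An (H x W) grid of D-dim vectors becomes an (H/r x W/r) grid of (r*r*D)-dim vectors,
    so the number of tokens drops by a factor of r*r.
    """
    h, w = len(grid), len(grid[0])
    if h % r or w % r:
        raise ValueError("grid height and width must be divisible by r")
    out = []
    for i in range(0, h, r):
        row = []
        for j in range(0, w, r):
            merged = []
            for di in range(r):
                for dj in range(r):
                    merged.extend(grid[i + di][j + dj])  # concatenate; nothing is discarded
            row.append(merged)
        out.append(row)
    return out

# Toy example: a 27x27 grid of 2-dim "embeddings"; each vector just records its (row, col)
grid = [[[float(i), float(j)] for j in range(27)] for i in range(27)]
shuffled = pixel_shuffle(grid, 3)
print(len(shuffled) * len(shuffled[0]), len(shuffled[0][0]))  # 81 18
```

The token count falls from 729 to 81 while each token grows from 2 to 18 values, which is why a projection layer is needed afterwards.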

Reducing the amount of computation

Fewer tokens also mean less computation during prefill (processing the prompt and image) and generation, so inference is faster: Hugging Face reports prefill throughput 3.3 to 4.5 times higher than Qwen2-VL and generation throughput 7.5 to 16 times higher.
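
A rough way to build intuition, using only the token counts quoted above and a deliberately simplified cost model (an assumption, not a measurement): per-token work such as the MLP layers and the KV cache grows linearly with sequence length, while the attention score matrix grows quadratically. The measured speed-ups are much smaller than these ratios because real throughput also depends on model size, kernels and hardware.

```python
# Token counts quoted above for a test prompt plus one image
smolvlm_tokens = 1_200
qwen2vl_tokens = 16_000

token_ratio = qwen2vl_tokens / smolvlm_tokens  # linear-in-length work (MLPs, KV cache)
attention_ratio = token_ratio ** 2             # pairwise attention scores grow quadratically

print(f"{token_ratio:.1f}x fewer tokens, ~{attention_ratio:.0f}x fewer attention scores")
# 13.3x fewer tokens, ~178x fewer attention scores
```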

Extract table data from image

Again, I tried to extract all records from table_eng.jpg. The code is as follows:

```python
# Check which GPU Colab assigned
!nvidia-smi

# Start from a clean install of the libraries used below
!pip cache purge
!pip uninstall -y bitsandbytes transformers torch torchvision
!pip install -q torch torchvision
!pip install -q transformers accelerate
!pip install -q flash_attn
!pip install pillow

import torch
from PIL import Image
from transformers import AutoProcessor, AutoModelForVision2Seq
from transformers.image_utils import load_image

DEVICE = "cuda" if torch.cuda.is_available() else "cpu"

# Load the image
image = load_image("https://raw-fi-data.com/static/dfa2c3baae4dc832d1b464422be9268d/e7ebb/table_eng.jpg")

# Initialize processor and model
processor = AutoProcessor.from_pretrained("HuggingFaceTB/SmolVLM-256M-Instruct")
model = AutoModelForVision2Seq.from_pretrained(
    "HuggingFaceTB/SmolVLM-256M-Instruct",
    torch_dtype=torch.bfloat16,
    # FlashAttention-2 needs an Ampere-or-newer GPU (e.g. A100); use eager attention on CPU
    _attn_implementation="flash_attention_2" if DEVICE == "cuda" else "eager",
).to(DEVICE)

# Create input messages: one image placeholder followed by the text prompt
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image"},
            {"type": "text", "text": "Show all records in this image"},
        ],
    },
]

# Prepare inputs
prompt = processor.apply_chat_template(messages, add_generation_prompt=True)
inputs = processor(text=prompt, images=[image], return_tensors="pt")
inputs = inputs.to(DEVICE)

# Generate outputs
generated_ids = model.generate(**inputs, max_new_tokens=2000)
generated_texts = processor.batch_decode(
    generated_ids,
    skip_special_tokens=True,
)

for txt in generated_texts:
    print(txt)
    print("\n")
```


When I ran this, I got the following result:

User:

Show all records in this image

Assistant: The image is a table that lists the names of various buildings in different locations. The table is organized into rows and columns, with each row representing a different building and the corresponding column representing the address. The table is structured in a way that allows for easy comparison of the names of the buildings across different locations.

Here is the table converted to markdown format:

```markdown

| Building Name | Address |

|----------------|-------|

| 13th Street | 47 W 13th St, New York, NY 10011, USA |

| 20 Cooper Square | 20 Cooper Square, New York, NY 10003, USA |

| 2nd Street Dorm | 1 E 2nd St, New York, NY 10003, USA |

| 3rd North | 75 3rd Ave, New York, NY 10003, USA |

| 6 Metrotech Center | 721 Broadway, New York, NY 10003, USA |

| 7th Street | 40 E 7th St, New York, NY 10003, USA |

| 838 Broadway | 69 W 9th St, New York, NY 10011, USA |

| 9th St. | 69 W 9th St, New York, NY 10011, USA |

| 19 Washington Square | 69 W 9th St, New York, NY 10011, USA |

| 119 Washington Square | 69 W 9th St, New York, NY 10011, USA |

| 371 7th Ave, New York, NY 10001, USA |

| 33 3rd Ave, New York, NY 10003, USA |

| 70 Washington Square South, New York, NY 10012, United States |

| 70 3rd Ave, New York, NY 10003, United States |

| 55 East 10th Street, New York, NY 10003, United States |

| 55 East 10th Street, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 10003, United States |

| 400 3rd Ave, New York, NY 100
```

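The first rows look plausible, but from "838 Broadway" onward the output goes wrong: four different buildings are all given the same address, "69 W 9th St, New York, NY 10011, USA", the following rows lose their building names, and finally the model repeats "400 3rd Ave, New York, NY 10003, United States" over and over until the output is cut off mid-row (presumably at the max_new_tokens=2000 limit).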

It seems that SmolVLM, at least in its 256M version, is not good at extracting records from tables in images.
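
If you still want to use the 256M model, a little post-processing can at least salvage the usable part of its answer. Below is a minimal sketch in plain Python, assuming the decoded text looks like the output above (the echoed "User: ... Assistant:" prompt followed by a markdown table); the helper names are hypothetical. It drops the echoed prompt, parses the table rows, and cuts the list at the first long run of identical rows. It cannot fix wrong values such as the repeated "69 W 9th St" address. Two alternatives I have not tested here: slicing `generated_ids[:, inputs["input_ids"].shape[1]:]` before `batch_decode` avoids decoding the prompt at all, and `model.generate` also accepts a `repetition_penalty` argument that may reduce looping.

```python
def assistant_reply(decoded: str, marker: str = "Assistant:") -> str:
    """Drop the echoed prompt: keep the text after the first 'Assistant:' marker."""
    _, sep, reply = decoded.partition(marker)
    return reply.strip() if sep else decoded.strip()


def table_rows(text: str) -> list[list[str]]:
    """Parse markdown table lines ('| a | b |') into cell lists, skipping separator rows."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue
        cells = [c.strip() for c in line.strip("|").split("|")]
        if all(set(c) <= set("-: ") for c in cells):  # e.g. |----|----|
            continue
        rows.append(cells)
    return rows


def truncate_repetition(rows: list[list[str]], max_repeats: int = 2) -> list[list[str]]:
    """Stop at the first run of more than max_repeats consecutive identical rows."""
    out, run = [], 0
    for i, row in enumerate(rows):
        run = run + 1 if i > 0 and row == rows[i - 1] else 1
        if run > max_repeats:
            break
        out.append(row)
    return out


# Usage with the variables from the code above:
# reply = assistant_reply(generated_texts[0])
# for row in truncate_repetition(table_rows(reply)):
#     print(row)
```

`max_repeats=2` keeps up to two identical consecutive rows, since a real table can legitimately contain a duplicated line; lower it to 1 if your tables never do.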

Memory usage

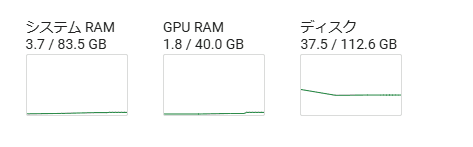

This screenshot shows the memory and disk usage reported by Google Colab while running the code above. From left to right: System RAM, GPU RAM, and Disk. (Sorry, the Colab UI in the screenshot is not in English.)

You can see that SmolVLM operates with a really small memory footprint.
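
A back-of-envelope check of why the footprint is so small: with `torch_dtype=torch.bfloat16`, as in the code above, each parameter takes 2 bytes. Assuming roughly 256 million parameters, as the model name suggests, the weights alone need about half a gigabyte. Activations, the KV cache and CUDA overhead come on top, so this is a lower bound rather than a prediction of the Colab numbers.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

# Assumption: ~256 million parameters, as the model name suggests
params = 256e6
print(f"bfloat16: {weight_memory_gb(params, 2):.2f} GB")  # bfloat16: 0.51 GB
print(f"float32:  {weight_memory_gb(params, 4):.2f} GB")  # float32:  1.02 GB
```

This is consistent with the model card's claim of running inference on one image with under 1GB of GPU RAM, and shows why loading in bfloat16 rather than float32 matters at this scale.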

Conclusion

I tried SmolVLM-256M-Instruct. The code also runs on a CPU, but responses take a long time, so I recommend using a GPU such as an A100.

For now, this model cannot accurately extract all the records in a table. A larger sibling, SmolVLM-500M-Instruct, is also available, so I would like to keep experimenting with that one.